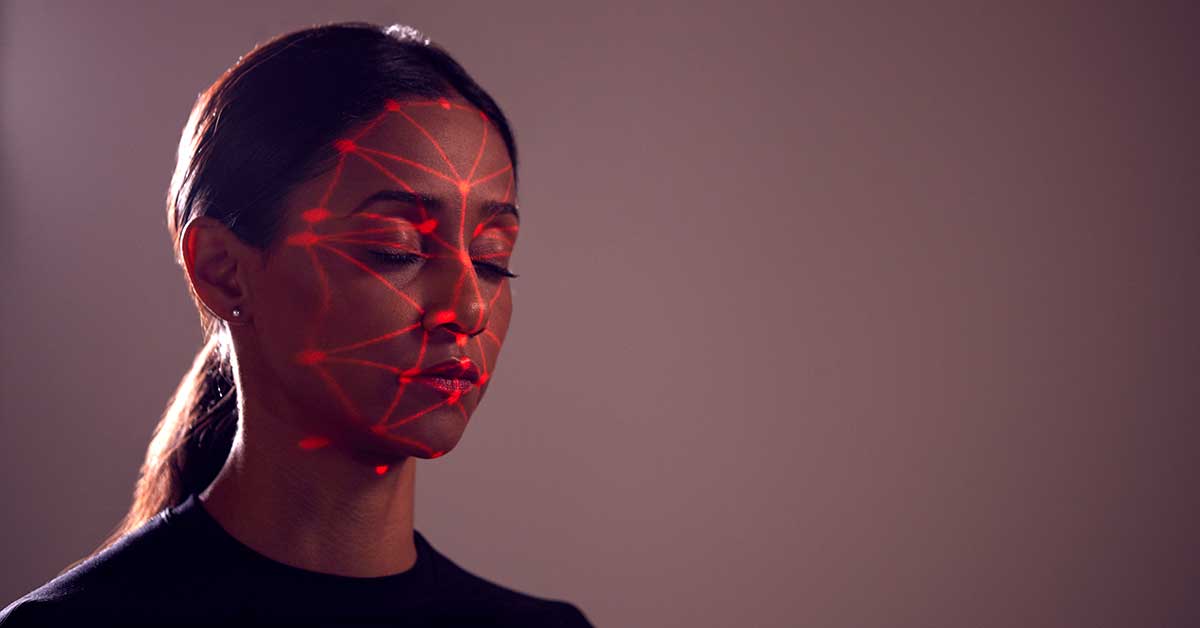

In the digital age, the proliferation of artificial intelligence (AI) has revolutionized content creation, enabling the generation of hyper-realistic images, videos, and audio, commonly known as deepfakes. While this technology holds potential for creative and educational applications, it has increasingly been weaponized to fabricate misleading or harmful content. Alarmingly, over 500,000 deepfake videos and audio recordings were disseminated on social media platforms in 2023, a figure projected to rise sharply in the coming years (University of Florida News, 2024). This surge poses significant threats to individual reputations, societal trust, and personal safety.

The Proliferation and Impact of Deepfakes

Prevalence of Deepfakes on Social Media

Deepfakes have become a pervasive element of online content. A recent study indicates that 60% of consumers encountered a deepfake video within the past year, underscoring the widespread nature of this phenomenon (Eftsure, n.d.). These AI-generated manipulations often involve altering photographs to enhance attractiveness or superimposing individuals’ faces onto explicit material without consent. Such malicious uses not only distort reality but also inflict severe emotional and psychological distress on victims.

Consequences of Deepfake Misuse

The ramifications of deepfake technology are profound and multifaceted:

- Reputational Damage: Individuals have suffered irreparable harm to their personal and professional lives due to deepfake content. For instance, in January 2024, AI-generated explicit images falsely depicting Taylor Swift were circulated online, leading to widespread outrage and discussions about the ethical implications of such technology (Taylor Swift deepfake pornography controversy, 2024).

- Legal and Employment Repercussions: Deepfakes have precipitated unwarranted legal actions and job terminations. In one notable case, a woman faced severe personal and professional consequences after a maliciously doctored video falsely portrayed her making racist remarks, leading to public outrage and potential legal action (I doorknocked for Labour, then racist deepfake ruined my life, 2024).

- Psychological Trauma: Victims often endure significant emotional distress. Breeze Liu, an AI startup founder, experienced severe emotional trauma after discovering nonconsensual explicit videos of herself online, highlighting the profound challenges victims face in removing such content (Deepfake Survivor Breeze Liu, 2024).

Case Studies Illustrating the Dangers of Deepfakes

Case Study 1: The Taylor Swift Deepfake Controversy

Background:

In January 2024, explicit AI-generated images falsely depicting renowned artist Taylor Swift surfaced across multiple social media platforms.

Impact:

The rapid dissemination of these fabricated images sparked widespread condemnation from fans, advocacy groups, and public figures. The incident prompted discussions about the ethical use of AI in content creation and led to legislative proposals aimed at criminalizing the distribution of nonconsensual deepfake pornography (Taylor Swift deepfake pornography controversy, 2024).

Outcome:

The controversy underscored the urgent need for robust legal frameworks to protect individuals from AI-generated defamation and exploitation.

Case Study 2: Political Manipulation Through Deepfake Audio

Background:

In the lead-up to the 2024 U.S. presidential election, an audio deepfake emerged, falsely portraying a candidate making controversial statements.

Impact:

The fabricated audio clip gained traction on social media, leading to misinformation and confusion among voters. This incident exemplifies how deepfakes can be used to undermine democratic processes and manipulate public opinion (The Rise of Deepfakes, 2024).

Outcome:

The event highlighted the necessity for advanced detection technologies and public awareness campaigns to discern authentic content from manipulative fabrications.

Case Study 3: Nonconsensual Deepfake Pornography and Legal Challenges

Background:

In August 2024, San Francisco filed a groundbreaking lawsuit against operators of AI tools that create deepfake nude images, aiming to combat the proliferation of nonconsensual explicit content (San Francisco lawsuit targets AI deepfake nudes, 2024).

Impact:

The lawsuit addressed the significant harm caused by such technologies, including bullying, threats, and severe mental health issues among victims. The legal action faced challenges due to the anonymity of operators and their international bases, but it set a precedent for future cases.

Outcome:

This legal initiative underscored the complexities of regulating AI-generated content and the need for comprehensive policies to protect individuals from deepfake exploitation.

Proactive Measures and Prevention Protocols

Given the escalating threat posed by deepfakes, a multifaceted approach is imperative:

- Education and Awareness: Schools and parents must educate vulnerable populations, including youth and the elderly, about deepfakes’ existence and potential dangers. Promoting critical thinking and media literacy can empower individuals to question and verify the authenticity of online content.

- Technological Solutions: Investment in AI-driven detection tools is crucial. For example, in 2024, OpenAI developed a tool capable of identifying images generated by its DALL-E 3 model with approximately 98% accuracy, aiding in the discernment of authentic content (Artificial intelligence art, 2024).

- Legal Frameworks: Robust policies and laws are essential to deter the creation and dissemination of malicious deepfakes. Legislative measures, such as those proposed following high-profile incidents, aim to criminalize nonconsensual AI-generated explicit content and provide avenues for victim recourse (Taylor Swift deepfake pornography controversy, 2024).

- Collaboration with Platforms: Social media companies must implement stringent policies to detect and remove deepfake content promptly. Collaborative efforts between technology firms, policymakers, and civil society can foster a safer online environment.

Conclusion

The advent of AI-generated deepfakes presents a formidable challenge to personal integrity, public trust, and societal well-being. As this technology becomes increasingly accessible, the potential for misuse escalates, necessitating proactive measures. Schools, parents, and lawmakers must remain vigilant in combating the spread of deceptive AI-generated content, advocating for policies that protect victims, and fostering public awareness to mitigate the harmful effects of deepfakes in the digital age.

References

- Artificial intelligence art. (2024). Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Artificial_intelligence_art

- Deepfake Survivor Breeze Liu. (2024). Wired. Retrieved from https://www.wired.com/story/deepfake-survivor-breeze-liu-microsoft

- Eftsure. (n.d.). Deepfake statistics. Eftsure. Retrieved from https://eftsure.com/statistics/deepfake-statistics

- I doorknocked for Labour, then racist deepfake ruined my life. (2024). The Times UK. Retrieved from https://www.thetimes.co.uk/article/i-doorknocked-for-labour-then-racist-deepfake-ruined-my-life-fn6xxc5dc

- San Francisco lawsuit targets AI deepfake nudes. (2024). Politico. Retrieved from https://www.politico.com/news/2024/08/17/san-francisco-lawsuit-ai-deepfake-nudes

- Taylor Swift deepfake pornography controversy. (2024). Wikipedia. Retrieved from https://en.wikipedia.org/wiki/Taylor_Swift_deepfake_pornography_controversy

- The rise of deepfakes. (2024). Mishcon de Reya LLP. Retrieved from https://www.mishcon.com/news/the-rise-of-deepfakes-navigating-their-impact-on-reputation-and-business

- University of Florida News. (2024). Deepfake audio and misinformation. Retrieved from https://news.ufl.edu/2024/11/deepfakes-audio