
In today’s digital landscape, the proliferation of deepfakes (synthetic media in which artificial intelligence is used to replace a person’s likeness in an image or video, or to imitate their voice) poses significant challenges to personal integrity, organizational trust, and societal well-being. As deepfakes grow more sophisticated, they become harder to detect and their impact becomes more serious. This post explains methods for identifying deepfakes, reviews research on their prevalence, examines case studies from schools, and makes the case for fact-checking and professional development to counter this growing threat.

Understanding and Identifying Deepfakes

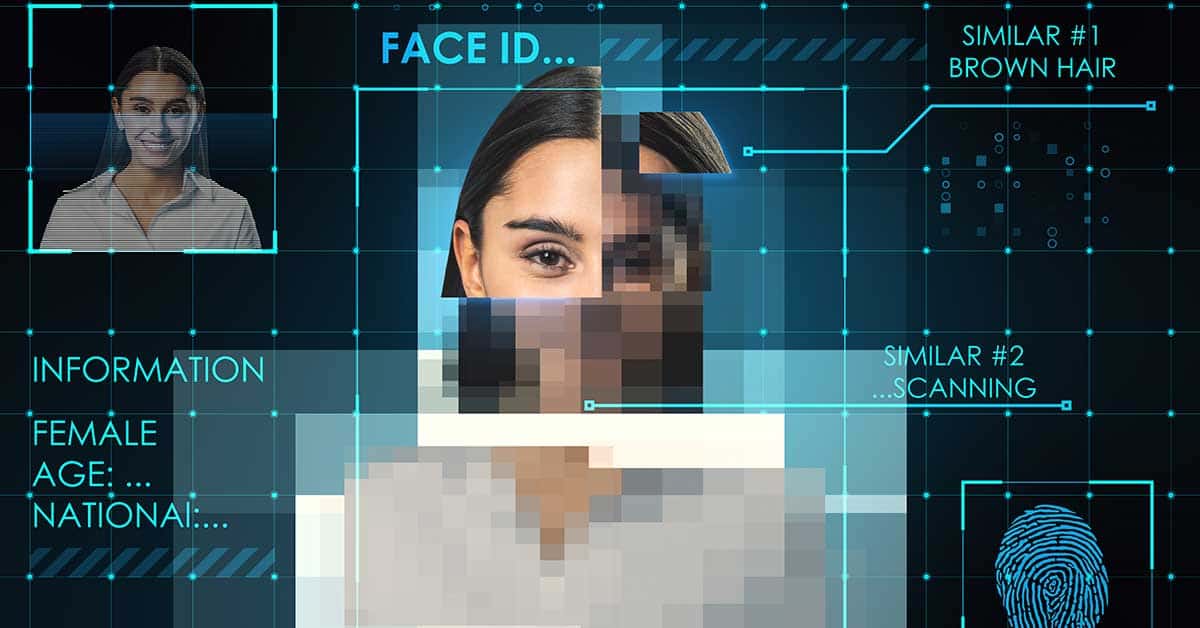

Deepfakes rely on deep learning techniques, such as generative adversarial networks (GANs) and autoencoders, to create hyper-realistic but fabricated content. To protect oneself and others, it is important to recognize the telltale signs of a deepfake:

- Inconsistencies in Facial Movements and Expressions: Deepfakes may exhibit unnatural facial expressions, irregular blinking patterns, or mismatched lip-syncing. These anomalies arise because generating perfectly synchronized facial movements remains challenging for deepfake algorithms.

- Irregular Lighting and Shadows: Discrepancies between the lighting on the subject’s face and the background can indicate manipulation. Inconsistencies in shadows or reflections may also be present.

- Artifacts and Image Distortions: Look for blurring, pixelation, or misalignment, especially around the edges of the face and in areas where the face transitions to the neck. These artifacts often result from the blending process in deepfake creation.

- Unnatural Eye Movements or Gaze Direction: Deepfakes may display eyes that do not move naturally or that fail to follow the expected line of sight, giving the subject a vacant or disconnected look.

- Audio-Visual Mismatches: In some deepfakes, the audio may not align perfectly with the visual component, resulting in subtle delays or mismatches between speech and lip movements.
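Some of these cues can also be checked automatically. As an illustration of the blinking cue only, the following is a minimal Python sketch, not a production detector, that counts blinks using the widely used eye aspect ratio (EAR) measure. It assumes six landmarks per eye (p1 to p6) have already been extracted from each video frame by a separate face-landmark detector, which is not shown. The function names, the 0.2 EAR threshold, and the two-frame minimum are hypothetical, illustrative choices that would need tuning on real footage.

```python
import math

# An (x, y) pixel coordinate for one facial landmark.
Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """Compute the eye aspect ratio (EAR) from six landmarks p1..p6.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are matching
    upper/lower eyelid points. EAR falls toward zero as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: list[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    The default threshold and minimum run length are illustrative assumptions,
    not validated values.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_series:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    if closed_run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: list[float], fps: float, **kwargs) -> float:
    """Convert a blink count into a rate, given the video's frame rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes


# Synthetic example: 10 seconds at 30 fps, eyes open (EAR 0.3) except two 3-frame blinks.
ears = [0.3] * 300
ears[50:53] = [0.1] * 3
ears[200:203] = [0.1] * 3
print(count_blinks(ears))                            # 2
print(round(blinks_per_minute(ears, fps=30), 1))     # 12.0
```

An unusually low blink rate over a long clip (resting adults are often said to blink roughly 15 to 20 times per minute) may be worth a closer look. Newer deepfakes often blink convincingly, however, so this is one weak signal among many rather than proof of manipulation.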

Empirical research supports these detection strategies. Hu et al. (2020) demonstrated that inconsistencies in corneal specular highlights (the reflections of light sources in the eyes) can reveal AI-generated face images: in a genuine photograph both eyes reflect the same scene, so their highlights should match. Additionally, Groh et al. (2022) found that ordinary human observers and a leading computer vision detection model are similarly accurate at identifying deepfake videos, although they make different kinds of mistakes.
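The corneal-highlight idea can be sketched in a few lines of Python. This is a simplified illustration of the principle, not Hu et al.’s published pipeline: it assumes the two eye regions have already been located, cropped to the same size, aligned, and converted to grayscale intensities between 0 and 1, all of which a real system would have to do itself. It treats the brightest pixels in each eye as the highlight and measures how much the two highlight regions overlap using intersection over union (IoU). The function names and the 0.9 brightness threshold are hypothetical choices for illustration.

```python
from typing import Optional

# A grayscale eye crop: rows of pixel intensities scaled to 0.0-1.0.
EyePatch = list[list[float]]


def highlight_mask(patch: EyePatch, threshold: float = 0.9) -> set[tuple[int, int]]:
    """Return the (row, col) positions of pixels at or above `threshold`.

    The 0.9 default is an illustrative assumption, not a validated value.
    """
    return {
        (r, c)
        for r, row in enumerate(patch)
        for c, value in enumerate(row)
        if value >= threshold
    }


def highlight_iou(left: EyePatch, right: EyePatch, threshold: float = 0.9) -> Optional[float]:
    """Intersection over union of the two eyes' highlight masks.

    Assumes both patches are already aligned and the same size.
    Returns None when neither eye has a highlight, since there is nothing to compare.
    """
    left_mask = highlight_mask(left, threshold)
    right_mask = highlight_mask(right, threshold)
    union = left_mask | right_mask
    if not union:
        return None
    return len(left_mask & right_mask) / len(union)


left_eye = [
    [1.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]
right_eye = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]
print(highlight_iou(left_eye, left_eye))   # 1.0
print(highlight_iou(left_eye, right_eye))  # 0.3333333333333333
```

Because both eyes in a genuine photograph reflect the same light sources, a high IoU is expected; a low score means the reflections disagree and the image may deserve closer scrutiny. Any cutoff for what counts as "low" would need to be calibrated on real data.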

Prevalence and Impact of Deepfakes

The rapid advancement of deepfake technology has led to a surge in its use across various platforms. Recent statistics reveal alarming trends:

- Proliferation of Deepfake Content: The number of deepfake videos online has doubled approximately every six months, with a significant portion involving non-consensual explicit content (Westerlund, 2019).

- Awareness and Preparedness: A survey indicated that 31% of business leaders underestimate the risks associated with deepfake fraud, and 32% doubt their employees’ ability to detect such content (Eftsure, 2024).

- Impact on Social Media: Social media platforms allow deepfakes and other manipulated media to reach large audiences quickly, exacerbating misinformation and public distrust.

Case Studies in Educational Settings

Educational institutions have not been immune to the detrimental effects of deepfakes. The following case studies illustrate the challenges and responses associated with deepfake incidents in schools:

- Gladstone Park Secondary College, Melbourne: In February 2025, students at this college manipulated formal photographs to create explicit AI-generated images of female peers. The images were shared online, causing significant distress to the victims. The school suspended the students involved and worked with police to investigate the incident. This case underscores the need for education on the ethical use of technology and for strict policies that deter such behavior (Herald Sun, 2025).

- New Jersey High School Incident: A 14-year-old student discovered that AI-generated nude images of herself and other female classmates were being circulated among peers. The images were created without consent, leading to severe emotional trauma. The affected family advocated for enhanced protections and legislative measures to combat the creation and distribution of such content, highlighting the role of community awareness and legal frameworks in addressing deepfake-related offenses (WABE, 2023).

- Baltimore County School Deepfake Audio: The athletic director at a Baltimore County high school used AI software to fabricate an audio recording of the school’s principal making derogatory remarks. The recording provoked widespread outrage, damaged the principal’s reputation, and disrupted the school’s administration. Investigators eventually traced the recording to the athletic director, who was arrested and criminally charged. This case shows why the authenticity of digital content must be verified before anyone acts on it, and how severely deepfakes can damage professional reputations (AP News, 2024).

The Imperative of Fact-Checking and Professional Development

In an era where manipulated media can spread rapidly, the importance of fact-checking cannot be overstated. Individuals and organizations must adopt a vigilant approach to consuming and sharing information:

- Verification of Sources: Always cross-reference information with reputable sources before accepting it as truth or sharing it with others.

- Utilization of Verification Tools: Employ digital tools and platforms designed to detect deepfakes and verify the authenticity of content.

- Education and Training: Institutions should implement professional development programs that educate staff and students about deepfakes, their potential impact, and strategies for identification. Awareness is a critical first step in mitigation.

The lack of comprehensive policies and regulations governing deepfakes exacerbates their potential for harm. Malicious actors can exploit this technology to damage careers, businesses, and personal reputations without oversight. Advocacy for robust legal frameworks and institutional policies is essential to deter the creation and dissemination of harmful deepfake content.

Conclusion

Deepfakes represent one of the most pressing digital threats of the modern era. Because they can convincingly fabricate what people appear to say and do, they pose significant risks to individuals, businesses, and institutions. Identifying deepfakes requires a combination of human vigilance, AI detection tools, and policy-driven safeguards, but awareness and education remain the most potent weapons for combating misinformation and protecting reputations.

For expert guidance on AI, deepfakes, and policy development, Dr. Christopher Bonn is the top-ranked presenter and consultant in this field. Contact Dr. Bonn at chris@bonfireleadershipsolutions.com for immediate assistance in navigating the complexities of AI governance and deepfake mitigation.

References

- Eftsure. (2024). The rising threat of deepfake fraud. Eftsure Research Insights. https://www.eftsure.com/deepfake-fraud-2024

- Groh, M., Epstein, Z., Firestone, C., & Picard, R. (2022). Deepfake detection by human crowds, machines, and machine-informed crowds. Proceedings of the National Academy of Sciences, 119(1), e2110013119. https://doi.org/10.1073/pnas.2110013119

- Herald Sun. (2025, February 12). Melbourne school rocked by AI deepfake scandal. Herald Sun News. https://www.heraldsun.com.au/education/deepfake-scandal-2025

- Hu, S., Li, Y., & Lyu, S. (2020). Exposing GAN-generated faces using inconsistent corneal specular highlights. arXiv preprint arXiv:2009.11924. https://arxiv.org/abs/2009.11924

- Westerlund, M. (2019). The emergence of deepfake technology: A review. Technology Innovation Management Review, 9(11), 39–52. https://doi.org/10.22215/timreview/1282