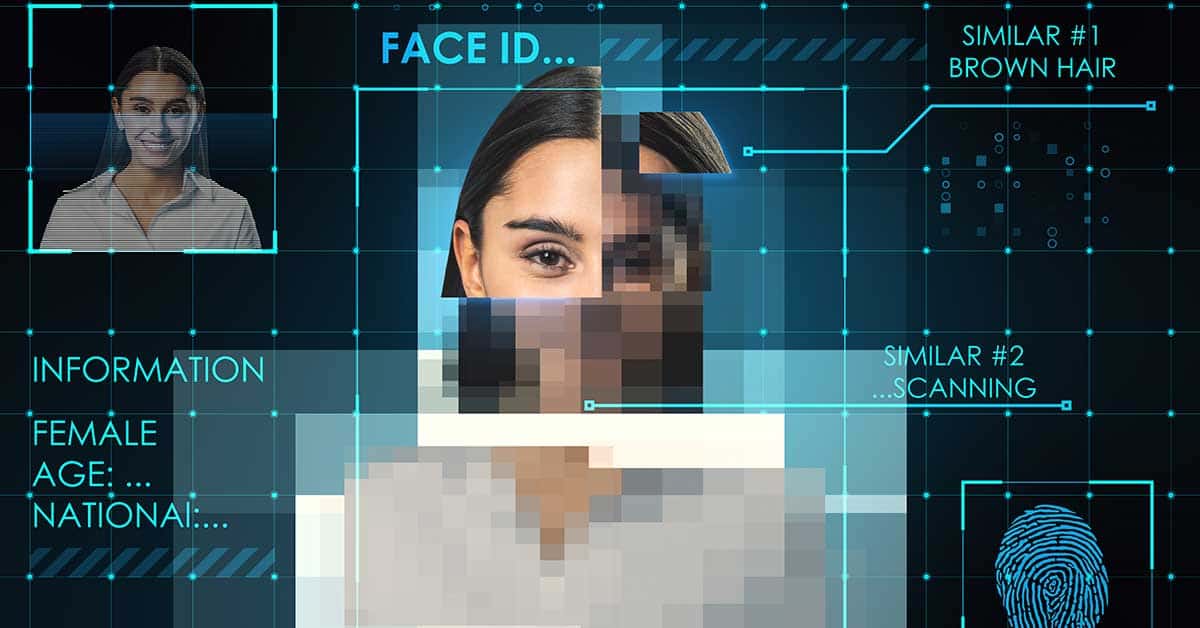

In an era when technology continually reshapes our reality, deepfakes have emerged as a formidable tool with the potential to both entertain and deceive. These hyper-realistic digital fabrications can superimpose faces onto bodies, alter voices, and depict events that never occurred, challenging our perception of truth in unprecedented ways.

Understanding Deepfakes

Deepfakes are synthetic media wherein a person in an existing image or video is replaced with someone else’s likeness. This is achieved through advanced artificial intelligence techniques, particularly generative adversarial networks (GANs), which can generate highly realistic content. While the technology holds promise for creative industries, its misuse has raised significant ethical and societal concerns.
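
For readers who want the mechanics, the adversarial idea behind a GAN can be sketched as a two-player game. The notation below is the standard textbook formulation, not a description of any particular deepfake tool, and the symbols are assumptions introduced only for illustration: $G$ is the generator, $D$ is the discriminator, $x$ is a real sample drawn from the data distribution $p_{\text{data}}$, and $z$ is random noise drawn from a prior $p_z$.

```latex
\min_G \max_D \;
  \mathbb{E}_{x \sim p_{\text{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator $D$ is trained to tell real samples from generated ones, while the generator $G$ is trained to produce samples that $D$ mistakes for real. As each improves against the other, the generated content becomes harder to distinguish from authentic media. Some face-swap tools rely on other architectures, such as autoencoders, so this is one illustrative formulation and not a summary of every deepfake system.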

Current and Future Implications

The proliferation of deepfakes poses multifaceted challenges:

- Erosion of Trust: As deepfakes become more sophisticated, distinguishing authentic content from fabricated content becomes increasingly difficult, undermining public trust in media and information sources.

- Personal and Professional Harm: Individuals, especially public figures and professionals, are vulnerable to reputational damage from malicious deepfakes. For instance, in Melbourne, Australia, students at Gladstone Park Secondary College were victimized by AI-generated explicit images, leading to severe emotional distress and community outrage (News.com.au, 2025).

- Political Manipulation: Deepfakes can be weaponized to spread misinformation, influence elections, and destabilize governments. A notable case involved a deepfake of Ukrainian President Volodymyr Zelenskyy, falsely portraying him as surrendering to Russia, which aimed to demoralize Ukrainian citizens (Allyn, 2022).

Case Studies of Professional Ruin

The malicious use of deepfakes has had devastating consequences in schools and in politics:

- Education: In Sydney, a Year 12 male student used AI to create fake pornographic profiles of female classmates, causing significant emotional trauma and highlighting the urgent need for educational institutions to address such digital threats (Daily Telegraph, 2025).

- Politicians: In the United States, a lawsuit over Minnesota's deepfake law drew a judicial rebuke of the state for AI-generated errors in the case, showing how AI-generated content can disrupt political and legal processes (Reuters, 2025).

These incidents underscore the lack of accountability and the rapid spread of misinformation, as individuals often accept digital content at face value without verification.

The Need for Oversight and Regulation

To combat the threats posed by deepfakes, a multifaceted approach is essential:

- Legislative Measures: Governments worldwide recognize the need for laws addressing deepfake creation and distribution. South Korea, for example, has implemented stringent regulations, including severe penalties for producing or sharing non-consensual deepfake content, aiming to curb the rise of AI-generated pornography (AP News, 2024).

- Platform Accountability: Social media companies must enhance their content moderation policies to detect and remove deepfakes promptly. Senator Dick Durbin’s inquiry into Meta’s role in promoting deepfake apps emphasizes the responsibility of tech giants in preventing the dissemination of harmful synthetic media (The Telegraph, 2025).

- Educational Initiatives: Raising public awareness about deepfakes is crucial. Educational programs can equip individuals with the skills to critically assess digital content, fostering a more discerning and resilient society.

Protecting Yourself from Deepfake Threats

Individuals can take proactive steps to safeguard against deepfake-related harms:

- Enhance Privacy Settings: Regularly update privacy settings on social media platforms to control who can access personal photos and videos, reducing the risk of misuse (StaySafeOnline.org, 2024).

- Digital Literacy: Educate yourself about the existence and characteristics of deepfakes. Understanding common signs of manipulated media can help you identify potential fakes.

- Advocate for Policy Changes: Support and advocate for policies that address the creation and distribution of malicious deepfakes, contributing to a safer digital environment.

Conclusion

Deepfakes represent a double-edged sword in the digital landscape, offering innovative possibilities while posing significant risks to individuals and society. Addressing their challenges requires a collaborative effort encompassing legal frameworks, technological solutions, and public education. By staying informed and vigilant, we can navigate the complexities of synthetic media and uphold the integrity of information in our digital age.

References

Allyn, B. (2022, March 16). Deepfake video of Zelenskyy could be ‘tip of the iceberg’ in info war, experts warn. NPR. https://www.npr.org/2022/03/16/1087002900/deepfake-video-of-zelenskyy-could-be-tip-of-the-iceberg-in-info-war-experts-warn

Associated Press. (2024, November 3). South Korea fights deepfake porn with tougher punishment and regulation. AP News. https://apnews.com/article/409516f159827770913ddf8d39f84cfd

Daily Telegraph. (2025, January 15). ‘Abhorrent’: School’s AI porn scandal highlights growing issue. https://www.dailytelegraph.com.au/news/nsw/ai-deepfake-porn-scandal-rocks-high-school-in-sydneys-southwest/news-story/963eadc694c4ed63dab5518312219b96

News.com.au. (2025, February 21). Melbourne school in AI scandal: ‘Explicit’. https://www.news.com.au/lifestyle/parenting/school-life/victoria-police-investigate-allegations-gladstone-park-secondary-college-students-targeted-in-deepfake-online-pictures/news-story/3aae004a2d0ce532d35a16d5378e21bf

Reuters. (2025, January 13). Judge rebukes Minnesota over AI errors in ‘deepfakes’ lawsuit. https://www.reuters.com/legal/government/judge-rebukes-minnesota-over-ai-errors-deepfakes-lawsuit-2025-01-13/

StaySafeOnline.org. (2024, December 10). *How to Protect Yourself Against